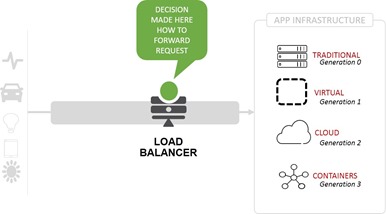

Load balancing is (almost) as old as the Internet. From the network layer to TCP to HTTP, incoming packets, connections, and requests are routinely distributed across resources to ensure application performance and availability.

The differences might seem pedantic but they’re actually pretty important as they have a direct impact on the types of deployment patterns you can implement. After all, if you don’t have visibility into HTTP, you can’t do more advanced patterns that rely on things like URI or content type.All load balancing requires that a decision be made as to where to forward the packet/request. This is where the distinction between network, connection, and application load balancing become important. Each make decisions based on specific characteristics that can enable or impede your ability to implement certain types of deployment patterns.

Network Load Balancing (NLB)

Network load balancing, appropriately, relies on network layer information. Source and destination IP along with TCP port (in some cases) form the basis for decisions.

When the network load balancing service receives a request, it typically hashes the source and destination IP (and TCP port) and then chooses a target resource. The request is then sent on to the resource.

Network load balancing has the advantage of being the fastest decision maker of all load balancing algorithms. It has the disadvantage of failing to balance traffic. What that means is that NLB is more about routing traffic than it is balancing it across resources. It tries, but there are situations in which it fails because it suffers from the ‘mega proxy’ problem.

The mega-proxy problem occurs when significant amounts of traffic originate from the same range of network addresses. This results in all the traffic being sent to the same resource, because there isn’t enough differentiation in the hashed variables to distribute across multiple resources. Astute developers will recognize this as a collision, a common problem with hashing-based algorithms. The issue with collisions is exacerbated by the fact that performance is impacted by load on the target resource. As load increases (on any system), performance decreases. That’s Operational Axiom #2.

So if you’re going to use this one for corporate consumption, you may experience poor distribution and thus sporadically poor performance. That’s because most corporate traffic is all coming from the same, small range of IP addresses. For consumer consumption, however, this should not be a problem.

You also can’t direct traffic based on app (or API) versions or use it to shard API traffic across multiple services, because it does not have the ability to factor in HTTP-based variables, like URI or cookies.

Plain Old Load Balancing (POLB)

Plain old load balancing (POLB) is the original form of load balancing in which actual load balancing algorithms you’re familiar with come into play. Round Robin. Least Connections. Fastest Response. These are the stalwart algorithms of scale that are still used today.

POLB is protocol-based and supports UDP (for streaming) and TCP (connection-oriented). It decisions are based on the algorithm selected, and nothing more.

Plain old load balancing receives a request and selects from a pool (sometimes called a farm or a cluster) of resources based on the algorithm. Then it forwards the request and gets out of the way.

The benefit of this type of load balancing is that it’s relatively fast and has a variety of algorithmic options to choose from. If performance is paramount, choose fastest response. If you just want to scale out quickly and easily, choose round robin.

The drawback of plain old load balancing is that you can only implement deployment patterns if the decision is based on something in the HTTP headers (like a cookie or URI). Depending on the load balancing service, you can implement patterns like A/B testing using variables like time or counters to determine which resource to select. It’s not necessarily as elegant a solution as using HTTP load balancing, but you can still achieve the same kind of results.

Plain Old Load Balancing may or may not be transparent, depending on the configuration. With NLB, you are assured that the IP address of the client (user, device) received by your app is the actual, real IP address of the client. With some configurations of POLB, the IP address received by your app is actually the IP address of the proxy providing the load balancing service. That means a little more work in your app to pluck out the real client IP address. So if you need that information, you should be aware that it may require you dig in the HTTP headers to find it.

HTTP Load Balancing

HTTP load balancing is nearly as old as POLB. The earliest form of HTTP load balancing was introduced back at the turn of the century and has only continued to expand in capabilities since then. Today, you can make load balancing decisions based on just about anything that comes with an HTTP request – from headers to payload, HTTP load balancing offers you the greatest flexibility of the three load balancing types.

HTTP load balancing requires an HTTP request, and in most implementations actually makes two different decisions. The first is based on an HTTP variable and the second is based on an algorithm.

To be completely accurate, HTTP load balancing is actually a combination of routing and forwarding. That is, it first routes a request and then forwards the request based on the algorithmic selection of a resource. This is what enables deployment patterns like Canary and Blue/Green Deployments and more robust A/B testing.

The issue with this type of load balancing is that it does add latency to the equation. The deeper into the HTTP request you go, the more latency adds up. Some load balancers have a “fast” mode that only allows load balancing based on HTTP headers that remediates this issue, but be aware that if you’re trying to make decisions based on some POST variable hidden deep in the HTTP payload that it will take more time.

The other issue is shared with POLB – that of transparency. You may or may not be getting the actual IP address of the client with each request, so be sure to check if you need that information in your app.

Choose your load balancing to match both your application architecture and specific goals with respect to scale and speed. Choosing the wrong load balancing – and algorithm – can have a significant impact on your ability to meet those goals.